📌 MAROKO133 Breaking ai: Google’s Willow chip to give UK scientists first taste of

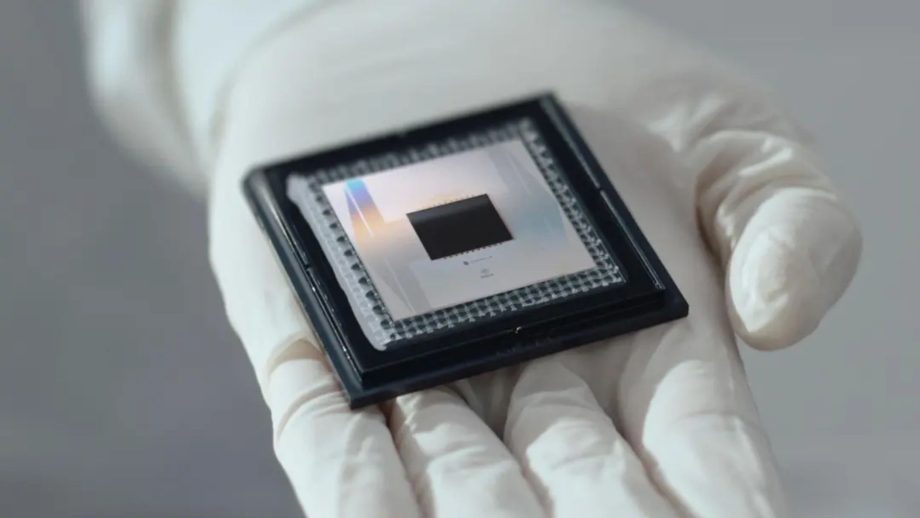

Google is partnering with the UK’s national lab for quantum computing to invite researchers to develop applications for its most powerful processor, the Willow chip.

These research areas include material science, chemistry, medicine, and life sciences.

Under this initiative, the UK’s National Quantum Computing Centre (NQCC) has partnered with Google Quantum AI to expand access to the Willow quantum processor for more UK researchers.

UK researchers are encouraged to submit proposals to access the Willow processor. It is known for its state-of-the-art technology and world-leading error correction capabilities.

The partnership is designed to uncover new real-world applications across disciplines by solving problems that classical computers currently cannot handle.

“Access to this new resource from Google will help keep Britain’s quantum innovators at the cutting edge, bolstering their efforts to put quantum to work in the design of new medicines, the shift to clean, affordable energy, and more. All of this work is crucial to this Government’s mission of national renewal,” said Lord Vallance, UK Science Minister, in a press release on December 12.

Willow’s power

Quantum devices operate on principles fundamentally distinct from the classical computers found in our smartphones and laptops.

Classical computers store information as simple binary bits (0 or 1).

Willow, a superconducting quantum processor unveiled in December 2024, operates on qubits. It taps into quantum-mechanical phenomena such as superposition and entanglement, which let a register of qubits represent many possible states at once.

The system’s potential power increases exponentially with each additional qubit.
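One way to make the exponential claim concrete is to count how many complex amplitudes a classical machine would need to store in order to describe an n-qubit state. The sketch below is only an illustration: the function names are hypothetical, and the figure of 16 bytes per amplitude assumes double-precision complex numbers, which is not something the article states. State-vector size is also only a rough proxy for "potential power", not a measure of useful computation.

```python
def state_space_size(num_qubits: int) -> int:
    """Number of complex amplitudes in the state vector of an n-qubit register."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return 2 ** num_qubits


def classical_memory_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to hold the full state vector classically.

    Assumption: each amplitude is stored as a 16-byte complex number.
    """
    return state_space_size(num_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (1, 2, 10, 30, 50):
        amplitudes = state_space_size(n)
        gib = classical_memory_bytes(n) / 2**30
        print(f"{n:>2} qubits: {amplitudes:,} amplitudes, about {gib:,.6f} GiB")
```

Each extra qubit doubles the count, which is why simulating even a few dozen qubits exactly becomes impractical on classical hardware.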

Willow’s performance is staggering. It has already solved a key challenge in quantum error correction, a problem pursued for almost 30 years. Specifically, the chip’s error rate falls as the system scales up with more qubits.

In a benchmark test, the chip completed a standard computation in under five minutes. For perspective, that same task is estimated to take one of the world’s fastest supercomputers an unfathomable “10 septillion” years — a duration vastly exceeding the age of the Universe.
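To put the "10 septillion" figure in perspective, the comparison can be worked through numerically. This is a rough sketch: it assumes the short-scale meaning of septillion (10^24) and a commonly cited age of the Universe of about 13.8 billion years, neither of which is spelled out in the article.

```python
# Short-scale septillion: 10^24 (assumption about the intended scale).
SEPTILLION = 10**24

# Estimated classical runtime quoted in the article: "10 septillion" years.
estimated_supercomputer_years = 10 * SEPTILLION

# Commonly cited approximate age of the Universe (not from the article).
AGE_OF_UNIVERSE_YEARS = 13.8e9

ratio = estimated_supercomputer_years / AGE_OF_UNIVERSE_YEARS

if __name__ == "__main__":
    print(f"Estimated runtime: {estimated_supercomputer_years:.1e} years")
    print(f"Multiples of the age of the Universe: {ratio:.2e}")
```

Under these assumptions the estimated runtime is several hundred trillion times the age of the Universe.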

UK collaboration

UK researchers are invited to submit innovative proposals; those selected will collaborate closely with specialists from Google and the NQCC to design and conduct their experiments using the processor.

The collaboration arrives as the global quantum race intensifies, with rivals like Amazon and IBM developing their own technologies.

The BBC reported that the UK is a major player in the quantum industry. Quantinuum, for example, was valued at $10 billion as of September.

Moreover, the National Quantum Computing Centre currently hosts seven quantum computers from British-based firms like Quantum Motion, ORCA, and Oxford Ionics.

To support this key area of the UK’s Industrial Strategy, the government is committing £670 million, with officials estimating that quantum technology could contribute £11 billion to the UK economy by 2045.

Meanwhile, Willow has already been used in academic studies.

For instance, in September 2025, scientists successfully observed a never-before-seen exotic phase of matter using its 58-qubit configuration.

Moreover, Google’s Quantum AI team has introduced a new algorithm called Quantum Echoes, which they claim could accelerate quantum computing. It could aid the design of improved drugs, catalysts, polymers, and batteries.

🔗 Source: interestingengineering.com

📌 MAROKO133 Hot ai: OpenAI's GPT-5.2 is here: what enterprises need to know W

The rumors were true: OpenAI on Thursday announced the release of its new frontier large language model (LLM) family, GPT-5.2.

It comes at a pivotal moment for the AI pioneer, which has faced intensifying pressure since rival Google’s Gemini 3 LLM seized the top spot on major third-party performance leaderboards and many key benchmarks last month. OpenAI leaders stressed in a press briefing, however, that the timing of this release had been discussed and worked on well before Gemini 3 launched.

OpenAI describes GPT-5.2 as its "most capable model series yet for professional knowledge work," aiming to reclaim the performance crown with significant gains in reasoning, coding, and agentic workflows.

"It’s our most advanced frontier model and the strongest yet in the market for professional use," Fidji Simo, OpenAI’s CEO of Applications, said during a press briefing today. "We designed 5.2 to unlock even more economic value for people. It's better at creating spreadsheets, building presentations, writing code, perceiving images, understanding long context, using tools, and handling complex, multi-step projects."

GPT-5.2 features a massive 400,000-token context window — allowing it to ingest hundreds of documents or large code repositories at once — and a 128,000 max output token limit, enabling it to generate extensive reports or full applications in a single go.

The model also features a knowledge cutoff of August 31, 2025, ensuring it is up-to-date with relatively recent world events and technical documentation. It explicitly includes "Reasoning token support," confirming the underlying architecture uses the chain-of-thought processing popularized by the "o1" series.

The 'Code Red' Reality Check

The release arrives following The Information's report of an emergency "Code Red" directive to OpenAI staff from CEO Sam Altman to improve ChatGPT, a move reportedly designed to mobilize resources following the "quality gap" exposed by Gemini 3. The Verge similarly reported on the timing of GPT-5.2's release ahead of the official announcement.

During the briefing, OpenAI executives acknowledged the directive but pushed back on the narrative that the model was rushed solely to answer Google.

"It is important to note this has been in the works for many, many months," Simo told reporters. She clarified that while the "Code Red" helped focus the company, it wasn't the sole driver of the timeline.

"We announced this Code Red to really signal to the company that we want to marshal resources in one particular area… but that's not the reason it's coming out this week in particular."

Max Schwarzer, lead of OpenAI's post-training team, echoed this sentiment to dispel the idea of a panic launch. "We've been planning for this release since a very long time ago… this specific week we talked about many months ago."

A spokesperson from OpenAI further clarified that the "Code Red" call applied to ChatGPT as a product, not solely underlying model development or the release of new models.

Under the Hood: Instant, Thinking, and Pro

OpenAI is segmenting the GPT-5.2 release into three distinct tiers within ChatGPT, a strategy likely designed to balance the massive compute costs of "reasoning" models with user demand for speed:

- GPT-5.2 Instant: Optimized for speed and daily tasks like writing, translation, and information seeking.
- GPT-5.2 Thinking: Designed for "complex, structured work" and long-running agents, this model leverages deeper reasoning chains to handle coding, math, and multi-step projects.
- GPT-5.2 Pro: The new heavyweight champion. OpenAI describes this as its "smartest and most trustworthy option," delivering the highest accuracy for difficult questions where quality outweighs latency.

For developers, the models are available immediately in the application programming interface (API) as gpt-5.2, gpt-5.2-chat-latest (Instant), and gpt-5.2-pro.

The Numbers: Beating the Benchmarks

The GPT-5.2 release includes leading metrics across most domains — specifically those that target the "professional knowledge work" gap where competitors have recently gained ground.

OpenAI highlighted a new benchmark called GDPval, which measures performance on "well-specified knowledge work tasks" across 44 occupations.

"GPT-5.2 Thinking is now state-of-the-art on that benchmark… and beats or ties top industry professionals on 70.9% of well-specified professional tasks like spreadsheets, presentations, and document creation, according to expert human judges," Simo said.

In the critical arena of coding, OpenAI is claiming a decisive lead. Schwarzer noted that on SWE-bench Pro, a rigorous evaluation of real-world software engineering, GPT-5.2 Thinking sets a new state-of-the-art score of 55.6%.

He emphasized that this benchmark is "more contamination resistant, challenging, diverse, and industrially relevant than previous benchmarks like SWE-bench Verified."

Other key benchmark results include:

- GPQA Diamond (Science): GPT-5.2 Pro scored 93.2%, edging out GPT-5.2 Thinking (92.4%) and surpassing GPT-5.1 Thinking (88.1%).
- FrontierMath: On Tier 1-3 problems, GPT-5.2 Thinking solved 40.3%, a significant jump from the 31.0% achieved by its predecessor.
- ARC-AGI-1: GPT-5.2 Pro is reportedly the first model to cross the 90% threshold on this general reasoning benchmark, scoring 90.5%.

The Price of Intelligence

Performance comes at a premium. While ChatGPT subscription pricing remains unchanged for now, the API costs for the new flagship models are steep compared to previous generations, reflecting the high compute demands of "thinking" mode. They are also at the upper end of API pricing for the industry.

- GPT-5.2 Thinking: Priced at $1.75 per 1 million input tokens and $14 per 1 million output tokens.
- GPT-5.2 Pro: The costs jump significantly to $21 per 1 million input tokens and $168 per 1 million output tokens.

GPT-5.2 Thinking is priced 40% higher in the API than the standard GPT-5.1 ($1.25/$10), signaling that OpenAI views the new reasoning capabilities as a tangible value-add rather than a mere efficiency update.

The high-end GPT-5.2 Pro follows the same pattern, costing 40% more than the previous GPT-5 Pro ($15/$120). While expensive, it still undercuts OpenAI’s most specialized reasoning model, o1-pro, which remains the most costly offering on the menu at a staggering $150 per million input tokens and $600 per million output tokens.
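The quoted price changes can be checked with a few lines of arithmetic. The sketch below uses only the per-million-token prices reported in this article; the helper names and the example request size (100,000 input tokens and 10,000 output tokens) are hypothetical, chosen purely for illustration.

```python
# (input, output) prices in USD per 1 million tokens, as reported in the article.
PRICES_USD_PER_MILLION = {
    "GPT-5.1": (1.25, 10.00),
    "GPT-5.2 Thinking": (1.75, 14.00),
    "GPT-5 Pro": (15.00, 120.00),
    "GPT-5.2 Pro": (21.00, 168.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request at the listed per-million-token prices."""
    input_price, output_price = PRICES_USD_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def percent_increase(old: float, new: float) -> float:
    """Relative price increase from old to new, in percent."""
    return (new - old) / old * 100


if __name__ == "__main__":
    for old, new in (("GPT-5.1", "GPT-5.2 Thinking"), ("GPT-5 Pro", "GPT-5.2 Pro")):
        old_in, old_out = PRICES_USD_PER_MILLION[old]
        new_in, new_out = PRICES_USD_PER_MILLION[new]
        print(f"{old} -> {new}: input +{percent_increase(old_in, new_in):.0f}%, "
              f"output +{percent_increase(old_out, new_out):.0f}%")

    # Hypothetical request size, for illustration only.
    for model in PRICES_USD_PER_MILLION:
        print(f"{model}: ${request_cost(model, 100_000, 10_000):.3f} per request")
```

Both the input and output prices rise by the same 40% in each pair, so the increase holds regardless of how a workload splits between input and output tokens.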

OpenAI argues that despite the higher per-token cost, the model’s "greater token efficiency" and ability to solve tasks in fewer turns make it economically viable for high-value enterprise workflows.

Here's how it compares to the current API costs for other competing models across the LLM field:

| Model | Input (/1M) | Outpu…

(Content automatically truncated.)

🔗 Source: venturebeat.com