📌 MAROKO133 Exclusive AI: Adobe Research Unlocking Long-Term Memory in Video World Models with State-Space Models

Video world models, which predict future frames conditioned on actions, hold immense promise for artificial intelligence, enabling agents to plan and reason in dynamic environments. Recent advancements, particularly with video diffusion models, have shown impressive capabilities in generating realistic future sequences. However, a significant bottleneck remains: maintaining long-term memory. Current models struggle to remember events and states from far in the past due to the high computational cost associated with processing extended sequences using traditional attention layers. This limits their ability to perform complex tasks requiring sustained understanding of a scene.

A new paper, “Long-Context State-Space Video World Models” by researchers from Stanford University, Princeton University, and Adobe Research, proposes an innovative solution to this challenge. They introduce a novel architecture that leverages State-Space Models (SSMs) to extend temporal memory without sacrificing computational efficiency.

The core problem lies in the quadratic computational complexity of attention mechanisms with respect to sequence length. As the video context grows, the cost of attention layers rises sharply (doubling the context roughly quadruples it), making long-term memory impractical for real-world applications. In practice, once the context exceeds a certain number of frames, the model effectively “forgets” earlier events, which hinders its performance on tasks that demand long-range coherence or reasoning over extended periods.
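A back-of-the-envelope count makes the scaling concrete. The sketch below compares the query-key scores computed by full causal attention with the state updates of a single linear-time recurrent scan, the kind of cost profile SSMs offer. `TOKENS_PER_FRAME = 256` and both function names are hypothetical illustrations, not the paper's tokenization or code.

```python
def causal_attention_pairs(num_frames: int, tokens_per_frame: int) -> int:
    """Query-key scores computed by full causal attention over the whole context."""
    n = num_frames * tokens_per_frame
    return n * (n + 1) // 2  # token i attends to itself and all i earlier tokens


def recurrent_scan_steps(num_frames: int, tokens_per_frame: int) -> int:
    """State updates made by one linear-time recurrent (SSM-style) scan."""
    return num_frames * tokens_per_frame  # one fixed-size state update per token


TOKENS_PER_FRAME = 256  # hypothetical; not the paper's actual tokenization

for frames in (100, 200, 400):
    pairs = causal_attention_pairs(frames, TOKENS_PER_FRAME)
    steps = recurrent_scan_steps(frames, TOKENS_PER_FRAME)
    print(f"{frames:>4} frames: {pairs:>14,} attention scores vs {steps:>7,} scan steps")
```

Doubling the number of frames roughly quadruples the attention count but only doubles the scan steps. This gap is why a recurrent state is attractive for long video contexts.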

The authors’ key insight is to leverage the inherent strengths of State-Space Models (SSMs) for causal sequence modeling. Unlike previous attempts that retrofitted SSMs for non-causal vision tasks, this work uses them where they are strongest: processing long causal sequences efficiently.

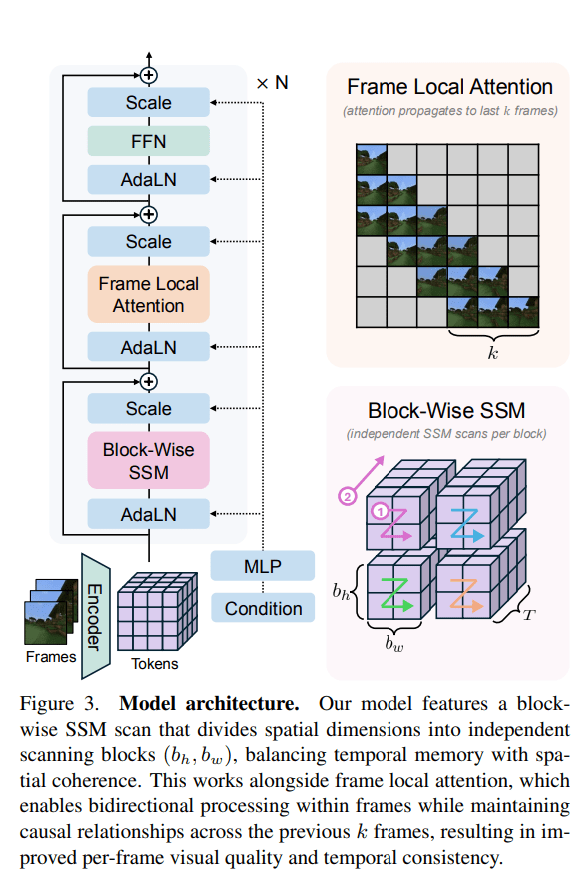

The proposed Long-Context State-Space Video World Model (LSSVWM) incorporates several crucial design choices:

- Block-wise SSM Scanning Scheme: This is central to their design. Instead of processing the entire video sequence with a single SSM scan, they employ a block-wise scheme. This strategically trades off some spatial consistency (within a block) for significantly extended temporal memory. By breaking down the long sequence into manageable blocks, they can maintain a compressed “state” that carries information across blocks, effectively extending the model’s memory horizon.

- Dense Local Attention: To compensate for the potential loss of spatial coherence introduced by the block-wise SSM scanning, the model incorporates dense local attention. This ensures that consecutive frames within and across blocks maintain strong relationships, preserving the fine-grained details and consistency necessary for realistic video generation. This dual approach of global (SSM) and local (attention) processing allows them to achieve both long-term memory and local fidelity.
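The block-wise scan can be illustrated with a toy model. This is our reading of the idea, not the paper's implementation: each `bh × bw` spatial block is flattened frame by frame and scanned along time with its own state. A fixed scalar recurrence stands in for the learned SSM layers (the article mentions Mamba2). `linear_scan`, `blockwise_scan`, and the coefficients `a`, `b`, `c` are hypothetical.

```python
import numpy as np


def linear_scan(x: np.ndarray, a: float, b: float, c: float) -> np.ndarray:
    """Toy SSM over axis 0 of x with shape (L, C): h_k = a*h_{k-1} + b*x_k, y_k = c*h_k."""
    h = np.zeros(x.shape[1:])
    y = np.empty(x.shape)
    for k in range(x.shape[0]):
        h = a * h + b * x[k]  # fixed-size state summarizes everything scanned so far
        y[k] = c * h
    return y


def blockwise_scan(video: np.ndarray, bh: int, bw: int,
                   a: float = 0.9, b: float = 1.0, c: float = 1.0) -> np.ndarray:
    """video: (T, H, W, C). Each bh x bw spatial block is scanned through time with its own state.

    Hypothetical toy: a fixed scalar recurrence stands in for a learned SSM layer.
    """
    T, H, W, C = video.shape
    assert H % bh == 0 and W % bw == 0, "blocks must tile the frame"
    out = np.empty(video.shape)
    for i in range(0, H, bh):
        for j in range(0, W, bw):
            block = video[:, i:i + bh, j:j + bw, :]   # (T, bh, bw, C)
            seq = block.reshape(T * bh * bw, C)       # frame-major order within the block
            out[:, i:i + bh, j:j + bw, :] = linear_scan(seq, a, b, c).reshape(T, bh, bw, C)
    return out


# An impulse in the top-left block never reaches the other blocks.
video = np.zeros((3, 4, 4, 1))
video[0, 0, 0, 0] = 1.0
out = blockwise_scan(video, bh=2, bw=2)
```

Within a 2 × 2 block, the same pixel in consecutive frames is only 4 scan steps apart, compared with H × W = 16 for a full-frame raster scan, so less of the state decays between frames. The price is that blocks never exchange information through the SSM. That missing cross-block link is what the dense local attention is meant to supply.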

The paper also introduces two key training strategies to further improve long-context performance:

- Diffusion Forcing: The model is trained to generate frames conditioned on a clean prefix of the input, which forces it to learn to maintain consistency over longer durations. When no prefix is sampled and all tokens are kept noised, training reduces to diffusion forcing, which the authors highlight as a special case of long-context training with a prefix length of zero. This pushes the model to generate coherent sequences even from minimal initial context.

- Frame Local Attention: For faster training and sampling, the authors implemented a “frame local attention” mechanism. It uses FlexAttention to achieve significant speedups compared to a fully causal mask. Frames are grouped into chunks (e.g., chunks of 5 with a frame window size of 10). Frames within a chunk attend to one another bidirectionally and also attend to frames in the previous chunk. This preserves a useful receptive field while reducing computational load.
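The prefix-based training described above can be sketched as a per-frame noise-level sampler. Diffusion forcing gives every frame its own independently sampled noise level. A clean prefix marks frames as context with level 0. The article does not give the paper's noise schedule, prefix-length distribution, or how often the prefix is dropped. The function name `sample_noise_levels`, the uniform sampling, and `p_no_prefix` are all assumptions.

```python
import numpy as np


def sample_noise_levels(num_frames: int, num_steps: int, rng: np.random.Generator,
                        p_no_prefix: float = 0.5) -> np.ndarray:
    """Per-frame diffusion timesteps for one training clip; 0 means the frame is kept clean.

    Hypothetical sketch: uniform levels and p_no_prefix are assumptions, not the paper's values.
    """
    assert num_frames >= 2 and num_steps >= 1
    # Diffusion forcing: every frame gets its own independently sampled noise level.
    levels = rng.integers(1, num_steps + 1, size=num_frames)
    # With probability 1 - p_no_prefix, keep a random-length prefix clean as context.
    if rng.random() >= p_no_prefix:
        prefix_len = int(rng.integers(1, num_frames))  # between 1 and num_frames - 1
        levels[:prefix_len] = 0
    return levels


rng = np.random.default_rng(0)
print(sample_noise_levels(num_frames=8, num_steps=1000, rng=rng))
```

Setting `p_no_prefix=1.0` always skips the prefix, which recovers plain diffusion forcing (prefix length zero).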

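The chunked mask from the frame local attention bullet can be written out explicitly. With chunks of 5 frames, each query may attend to every frame in its own chunk (bidirectional) and in the previous chunk, giving a 10-frame window. FlexAttention lets a mask be expressed as a predicate over query and key positions. The sketch below uses plain NumPy instead, and `frame_local_allowed` and `frame_local_mask` are hypothetical names. The default `tokens_per_frame` value used in the demo is illustrative only.

```python
import numpy as np

CHUNK = 5  # frames per chunk, as in the article's example; window = 2 * CHUNK = 10 frames


def frame_local_allowed(q_frame: int, kv_frame: int, chunk: int = CHUNK) -> bool:
    """Can a token in frame q_frame attend to a token in frame kv_frame?"""
    q_chunk, kv_chunk = q_frame // chunk, kv_frame // chunk
    # Same chunk: bidirectional. Previous chunk: look-back. Anything else: masked.
    return kv_chunk == q_chunk or kv_chunk == q_chunk - 1


def frame_local_mask(num_frames: int, tokens_per_frame: int, chunk: int = CHUNK) -> np.ndarray:
    """Boolean (N, N) token mask, N = num_frames * tokens_per_frame; True = may attend."""
    chunk_of = np.arange(num_frames * tokens_per_frame) // tokens_per_frame // chunk
    q, kv = chunk_of[:, None], chunk_of[None, :]
    return (kv == q) | (kv == q - 1)


mask = frame_local_mask(num_frames=15, tokens_per_frame=2)
print(mask.shape, mask.sum(axis=1).max())  # (30, 30) 20: no query sees more than 10 frames
```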
The researchers evaluated their LSSVWM on challenging datasets, including Memory Maze and Minecraft, which are specifically designed to test long-term memory capabilities through spatial retrieval and reasoning tasks.

The experiments demonstrate that their approach substantially surpasses baselines in preserving long-range memory. Qualitative results, as shown in supplementary figures (e.g., S1, S2, S3), illustrate that LSSVWM can generate more coherent and accurate sequences over extended periods compared to models relying solely on causal attention or even Mamba2 without frame local attention. For instance, on reasoning tasks for the maze dataset, their model maintains better consistency and accuracy over long horizons. Similarly, for retrieval tasks, LSSVWM shows improved ability to recall and utilize information from distant past frames. Crucially, these improvements are achieved while maintaining practical inference speeds, making the models suitable for interactive applications.

The paper “Long-Context State-Space Video World Models” is on arXiv.

The post Adobe Research Unlocking Long-Term Memory in Video World Models with State-Space Models first appeared on Synced.

🔗 Source: syncedreview.com

📌 MAROKO133 Hot AI: 500x more powerful: US’ new GridEdge Analyzer processes 60,000 measurements per second

Researchers have developed a secure, affordable sensing device that delivers unprecedented real-time insight into electric grid behavior. Called the Universal GridEdge Analyzer, the compact device was developed by researchers from the University of Tennessee (UT) and Oak Ridge National Laboratory (ORNL).

The compact GridEdge Analyzer can be embedded into power electronics or even plugged into a wall outlet to measure the smallest changes in electrical voltage and current.

60,000 measurements per second

Researchers revealed that the compact analyzer records the smallest changes in electrical voltage and current as waveforms, then almost instantly compresses, encrypts and streams the data to centralized servers. Processing 60,000 measurements per second – 500 times more than the previous technology – it can capture split-second reactions from power electronics that help run today’s grid.
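Two figures follow from the numbers above. These assume that “500 times more” refers to the measurement rate and that the analyzer samples a U.S. grid at its nominal 60 Hz. The first gives the implied rate of the previous technology, and the second gives the waveform resolution per AC cycle:

```latex
\frac{60{,}000~\text{measurements/s}}{500} = 120~\text{measurements/s}
\qquad
\frac{60{,}000~\text{measurements/s}}{60~\text{cycles/s}} = 1{,}000~\text{measurements per cycle}
```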

“Unlike traditional centralized power plants, data centers and distributed energy plants with batteries use power electronics to connect to the grids. Those power electronics can switch very quickly,” said Yilu Liu, lead researcher and UT-ORNL Governor’s Chair for Power Electronics.

“Their fast-acting nature can impact the stability of the entire grid, so monitoring these dynamics helps us improve future grid operations, keeping the lights on for everyone.”

Device delivers more detailed information at faster speeds

The technology builds on UT’s long-running grid frequency monitoring network called FNET/GridEye. That network’s 200 sensors across the U.S. and about 100 more worldwide collect and transmit aggregated data for a broad overview of grid activity. The new device delivers more detailed information at faster speeds, capturing incidents that earlier technology would have missed. Designed for flexibility, it can be embedded in power electronics, installed on a distribution line or even plugged into a wall outlet, according to a press release.

Utilities in places like Hawaii and Texas are using the device to understand how concentrations of power electronics interact with the grid. For example, at AI data centers, even minor voltage fluctuations can trigger a switch to backup power, requiring immediate action to control the energy load. The analyzer can help operators anticipate and navigate these episodes to maintain stable operations, as per the release.
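Waveform-rate data can resolve a fluctuation lasting only a few cycles. The release does not describe how the analyzer or its operators process the data, so the following is only an illustration on synthetic data. The sketch computes the RMS voltage of each cycle at 60,000 samples/s, assuming a 60 Hz grid. It then flags cycles below 90% of nominal, a common voltage-sag convention used here as an assumption. `cycle_rms` and `sag_cycles` are hypothetical names.

```python
import numpy as np

SAMPLE_RATE = 60_000  # samples per second, the analyzer's reported measurement rate
GRID_HZ = 60          # assumed nominal U.S. grid frequency
SAMPLES_PER_CYCLE = SAMPLE_RATE // GRID_HZ  # 1,000 samples per cycle


def cycle_rms(v: np.ndarray) -> np.ndarray:
    """RMS voltage of each complete cycle in the waveform v."""
    n_cycles = len(v) // SAMPLES_PER_CYCLE
    cycles = v[: n_cycles * SAMPLES_PER_CYCLE].reshape(n_cycles, SAMPLES_PER_CYCLE)
    return np.sqrt(np.mean(cycles ** 2, axis=1))


def sag_cycles(v: np.ndarray, nominal_rms: float, threshold: float = 0.9) -> np.ndarray:
    """Indices of cycles whose RMS drops below threshold * nominal (hypothetical rule)."""
    return np.flatnonzero(cycle_rms(v) < threshold * nominal_rms)


# Synthetic 10-cycle, 120 V RMS waveform with a 3-cycle dip to 80% magnitude.
t = np.arange(10 * SAMPLES_PER_CYCLE) / SAMPLE_RATE
v = 120 * np.sqrt(2) * np.sin(2 * np.pi * GRID_HZ * t)
v[4 * SAMPLES_PER_CYCLE : 7 * SAMPLES_PER_CYCLE] *= 0.8
print(sag_cycles(v, nominal_rms=120.0))  # cycles 4, 5, 6
```

At a coarser sampling rate, a dip lasting 50 ms could fall between or inside a handful of samples. At 1,000 samples per cycle, each affected cycle is measured individually.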

Real-time analyzers

Wide Area Monitoring Systems (WAMS) use real-time analyzers to observe grid dynamics across entire regions. Operators gain visibility into oscillations, congestion, and system-wide stability. At the local level, analyzers help utilities manage distributed energy resources (DERs) such as rooftop solar, battery storage, and microgrids, ensuring smooth coordination between customers and the grid.

Predictive analytics identify equipment likely to fail, allowing utilities to shift from time-based maintenance to condition-based maintenance, reducing costs and improving safety.

Utilities across the U.S. are increasingly investing in real-time analytics platforms offered by major technology and energy companies. These systems often integrate with existing SCADA and energy management systems, ensuring continuity while upgrading capabilities.

The trend shows that real-time insight is becoming a standard requirement for grid operations, not a premium feature.

As digital technologies continue to evolve, real-time grid analyzers will become even more capable. Future developments are expected to include automated decision-making, advanced grid simulations, and deeper integration of distributed energy resources.

🔗 Source: interestingengineering.com

🤖 MAROKO133 Note

This article is an automatic summary of several trusted sources. We pick trending topics so you always stay up to date.
