📌 MAROKO133 Breaking ai: New boron nitride quantum sensors measure stress and magn

The world of quantum physics is mysterious. But what happens when that realm of subatomic particles is placed under immense pressure?

A team led by physicists at Washington University in St. Louis has created quantum sensors that can survive in extreme conditions.

Built inside unbreakable sheets of crystallized boron nitride, the devices can measure stress and magnetism in materials under pressures more than 30,000 times greater than atmospheric pressure.

“We’re the first ones to develop this sort of high-pressure sensor,” said Chong Zu, assistant professor of physics in Arts & Sciences and member of the university’s Center for Quantum Leaps.

“It could have a wide range of applications in fields ranging from quantum technology, material science, to astronomy and geology.”

Sensors built from vacancy

The work involved graduate students, postdoctoral researchers, and collaborating faculty members.

Support came in part from a US National Science Foundation training grant, which funded six months of collaborative work at Harvard University.

The team created the sensors using neutron radiation beams. These beams knocked boron atoms out of ultrathin sheets of boron nitride. The empty spots immediately trapped electrons.

Those electrons, through quantum interactions, changed their spin depending on local magnetism, stress, or temperature. Tracking the spin revealed material properties at the quantum level.

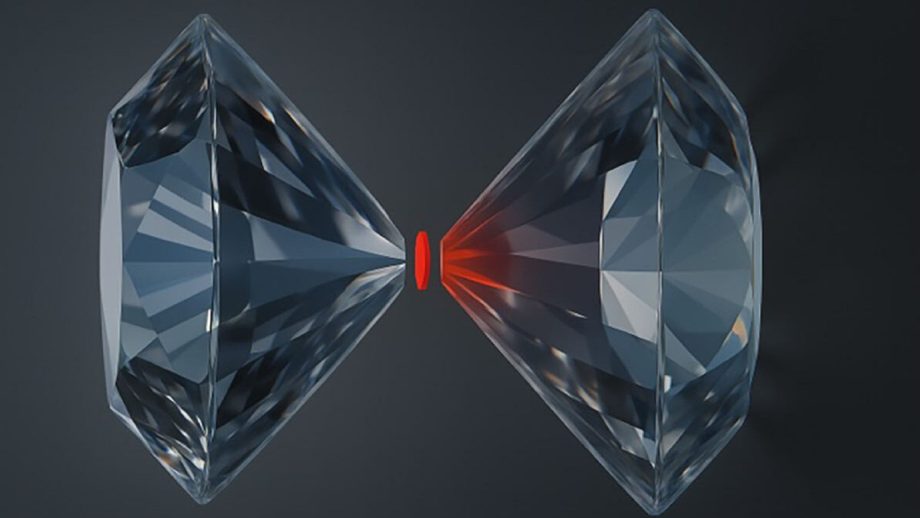

Zu’s group had earlier built similar sensors in diamonds, which power WashU’s two quantum diamond microscopes.

Diamond sensors are effective but have limitations. Because diamonds are three-dimensional, the sensors cannot easily be placed close to the material under study.

Boron nitride sheets solve this issue. They are extremely thin, less than 100 nanometers thick, or about 1,000 times thinner than a human hair.

“Because the sensors are in a material that’s essentially two-dimensional, there’s less than a nanometer between the sensor and the material that it’s measuring,” Zu said.

Diamonds still essential tools

Diamonds continue to play a role. “To measure materials under high pressure, we need to put the material on a platform that won’t break,” explained graduate student Guanghui He.

The group made “diamond anvils,” small flat surfaces only 400 micrometers wide, to compress samples. “The easiest way to create high pressure is to apply great force over a small surface,” He said.
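He's point is the relation pressure = force / area. As a rough illustration only, the sketch below estimates the force needed to reach 30,000 atmospheres on an anvil face 400 micrometers wide. The circular face shape, the use of exactly 30,000 standard atmospheres, and the function name `force_for_pressure` are assumptions made for this example, not details reported by the team.

```python
import math

ATM_PA = 101_325.0  # one standard atmosphere, in pascals


def force_for_pressure(pressure_atm: float, face_width_m: float) -> float:
    """Force in newtons needed to reach a pressure on a circular face.

    Assumes (hypothetically) that the anvil face is a circle whose
    diameter equals the quoted width.
    """
    area_m2 = math.pi * (face_width_m / 2) ** 2
    return pressure_atm * ATM_PA * area_m2


# 30,000 atm on a 400-micrometer-wide face (illustrative inputs)
pressure_gpa = 30_000 * ATM_PA / 1e9
force_n = force_for_pressure(30_000, 400e-6)

print(f"pressure: about {pressure_gpa:.1f} GPa")
print(f"force:    about {force_n:.0f} N")
```

Under these assumptions the pressure is about 3 gigapascals and the required force comes to a few hundred newtons, which is why such a small face makes extreme pressures reachable with modest force.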

Tests confirmed the boron nitride sensors could detect subtle changes in the magnetic field of a two-dimensional magnet.

The team now plans to test other materials, including rocks from high-pressure environments like Earth’s core.

“Measuring how these rocks respond to pressure could help us better understand earthquakes and other large-scale events,” Zu said.

The sensors may also shed light on superconductivity. Known superconductors require high pressure and extremely low temperatures. Controversial claims of room-temperature superconductors remain unsettled.

“With this sort of sensor, we can collect the necessary data to end the debate,” said graduate student Ruotian “Reginald” Gong, a co-first author.

Zu said the project also highlights the importance of collaboration. “The program encourages collaboration between universities,” he said. “Now that we have these sensors, the high-pressure chamber and the diamond anvils, we’ll have more opportunities for exploration.”

The findings have been published in the journal Nature Communications.

🔗 Source: interestingengineering.com

📌 MAROKO133 Breaking ai: Parents Testifying Before US Senate, Saying AI Killed The

Content warning: this story includes discussion of self-harm and suicide. If you are in crisis, please call, text or chat with the Suicide and Crisis Lifeline at 988, or contact the Crisis Text Line by texting TALK to 741741.

Parents of children who died by suicide following extensive interactions with AI chatbots are testifying this week in a Senate hearing about the possible risks of AI chatbot use, particularly for minors.

The hearing, titled “Examining the Harm of AI Chatbots,” will be held this Tuesday by the US Senate Judiciary Subcommittee on Crime and Terrorism, a bipartisan delegation helmed by Republican Josh Hawley of Missouri. It’ll be live-streamed on the judiciary committee’s website.

The parents slated to testify include Megan Garcia, a Florida mother who in 2024 sued the Google-tied startup Character.AI, along with the company’s cofounders, Noam Shazeer and Daniel de Freitas, and Google itself, over the suicide of her 14-year-old son, Sewell Setzer III. He took his life after developing an intensely intimate relationship with a Character.AI chatbot with which he was romantically and sexually involved. Garcia alleges that the platform emotionally and sexually abused her teenage son, who consequently experienced a mental breakdown and an eventual break from reality that caused him to take his own life.

Also scheduled to speak to Senators are Matt and Maria Raine, California parents who in August filed a lawsuit against ChatGPT maker OpenAI following the suicide of their 16-year-old son, Adam Raine. According to the family’s lawsuit, Adam engaged in extensive, explicit conversations about his suicidality with ChatGPT, which offered unfiltered advice on specific suicide methods and encouraged the teen — who had expressed a desire to share his dark feelings with his parents — to continue to hide his suicidality from loved ones.

Both lawsuits are ongoing, and the companies have pushed back against the allegations. Google and Character.AI attempted to have Garcia’s case dismissed, but the presiding judge shot down their dismissal motion.

In response to litigation, both companies have moved — or at least made big promises — to strengthen protections for minor users and users in crisis, efforts that have included installing new guardrails directing at-risk users to real-world mental health resources and implementing parental controls.

Character.AI, however, has repeatedly declined to provide us with information about its safety testing following our extensive reporting on easy-to-find gaps in the platform’s content moderation.

Regardless of promised safety improvements, the legal battles have raised significant questions about minors and AI safety at a time when AI chatbots are increasingly ubiquitous in young people’s lives, despite a glaring lack of regulation designed to moderate chatbot platforms or ensure enforceable, industry-wide safety standards.

In July, an alarming report from the nonprofit advocacy group Common Sense Media found that over half of American teens engaged regularly with AI companions, including chatbot personas hosted by Character.AI. The report, which surveyed a cohort of American teens aged 13 to 17, was nuanced, showing that while some teens seemed to be forming healthy boundaries around the tech, others reported feeling that their human relationships were less satisfying than their connections to their digital companions. The main takeaway, though, was that AI companions are already deeply intertwined with youth culture, and kids are definitely using them.

“The most striking finding for me was just how mainstream AI companions have already become among many teens,” Dr. Michael Robb, Common Sense’s head of research, told Futurism at the time of the report’s release. “And over half of them say that they use it multiple times a month, which is what I would qualify as regular usage. So just that alone was kind of eye-popping to me.”

General-use chatbots like ChatGPT, meanwhile, are also growing in popularity among teens, while chatbots continue to be embedded into popular youth social media platforms like Snapchat and Meta’s Instagram. And speaking of Meta, the big tech behemoth recently came under fire after Reuters obtained an official Meta policy document that said it was appropriate for children to engage in “conversations that are romantic or sensual” with its easily-accessible chatbots. The document even outlined multiple company-accepted interactions for its chatbots to engage in — which, yes, included sensual conversations about children’s bodies and romantic dialogues between minor-aged human users and characters based on adults.

The hearing also comes days after the Federal Trade Commission (FTC) announced a probe into seven major tech companies over concerns about AI and minor safety, including Character.AI, Google owner Alphabet, OpenAI, xAI, Snap, Instagram, and Meta.

“The FTC inquiry seeks to understand what steps, if any, companies have taken to evaluate the safety of their chatbots when acting as companions,” reads the FTC’s announcement of the inquiry, “to limit the products’ use by and potential negative effects on children and teens, and to apprise users and parents of the risks associated with the products.”

The post Parents Testifying Before US Senate, Saying AI Killed Their Children appeared first on Futurism.

🔗 Source: futurism.com

🤖 MAROKO133 Note

This article is an automated summary drawn from several trusted sources. We pick trending topics so you always stay up to date.

✅ Next update in 30 minutes, with a random topic.